This is a weekly newsletter about the art and science of building and investing in tech companies. To receive Investing 101 in your inbox each week, subscribe here:

This week I got to publish a deep dive I’ve been working on for a long time! Any big undertaking to write about AI is sure to be immediately outdated. But we tried to unpack the history, both of the AI moment we’re experiencing right now and of the issues facing the technology that are putting experts, ideas, and institutions along an ideological spectrum from closed off to open source.

Special thanks to my co-authors: Alex Liu, who is a Research Fellow with Contrary Research and a software engineer at Fieldguide, and the co-founders of Nomic, Brandon Duderstadt and Andriy Mulyar. This week, Contrary announced that we led Nomic’s seed round last year, and that they’ve recently raised a Series A from Coatue where we participated alongside exceptional angels like Amjad Masad, the CEO of Replit, Clem Delangue, the CEO of Hugging Face, and Naval Ravikant.

Check out the full deep dive here. To get a taste, here’s just the first section: The Rise of OpenAI.

The Rise of OpenAI

Artificial intelligence has been in the dreams of technologists since at least the 1950s, when Alan Turing first published “Computing Machinery and Intelligence.” Since then, the progression of intelligent technology has consisted of many leaps forward followed by a few steps back. Before fundamentally intelligent systems were possible, computational power and the availability of data had to reach critical mass. Incremental progress in machine learning and data science gave rise to their gradual ascendance. As Moore’s Law kept pace, and the internet brought about the age of “big data”, the stage was set for the emergence of legitimate AI.

In 2012, a groundbreaking paper was published called “ImageNet Classification with Deep Convolutional Neural Networks.” The authors, Dr. Geoffrey Hinton and his two grad students Ilya Sutskever and Alex Krizhevsky, introduced a deep convolutional neural network (CNN) architecture called AlexNet, which represented a big leap forward in image classification tasks. One key breakthrough was that AlexNet could be trained on a GPU, allowing it to harness much more computational power than algorithms trained on CPU alone. The paper also led to a number of other influential papers being published on CNNs and GPUs in deep learning. Then, in 2015, OpenAI was founded as a non-profit with the goal of building AI for everyone. In the company’s founding announcement, OpenAI emphasized its focus on creating value over capturing it:

“As a non-profit, our aim is to build value for everyone rather than shareholders. Researchers will be strongly encouraged to publish their work, whether as papers, blog posts, or code, and our patents (if any) will be shared with the world.”

The company regarded its openness as a competitive edge in hiring, promising “the chance to explore research aimed solely at the future instead of products and quarterly earnings and to eventually share most—if not all—of this research with anyone who wants it.” The type of talent that flocked to OpenAI became a who’s-who of expertise in the field.

By 2014, the market rate for top AI researchers matched the pay for top quarterback prospects in the NFL. This kind of pay, offered by large tech companies like Google or Facebook, was viewed as a negative signal by some of the early people involved in building OpenAI. For example, Wojciech Zaremba, who had previously spent time at NVIDIA, Google Brain, and Facebook, became a co-founder of OpenAI after feeling more drawn to OpenAI’s mission than the money:

“Zaremba says those borderline crazy offers actually turned him off—despite his enormous respect for companies like Google and Facebook. He felt like the money was at least as much of an effort to prevent the creation of OpenAI as a play to win his services, and it pushed him even further towards the startup’s magnanimous mission.”

A Shift In The Narrative

As OpenAI continued to progress in its research, the company increasingly became focused on the development of artificial general intelligence (AGI). In March 2017, OpenAI’s leadership decided the company’s non-profit status was no longer feasible if it was going to make real strides towards AGI, because as a non-profit it wouldn’t be able to secure the massive quantity of computational resources it would need.

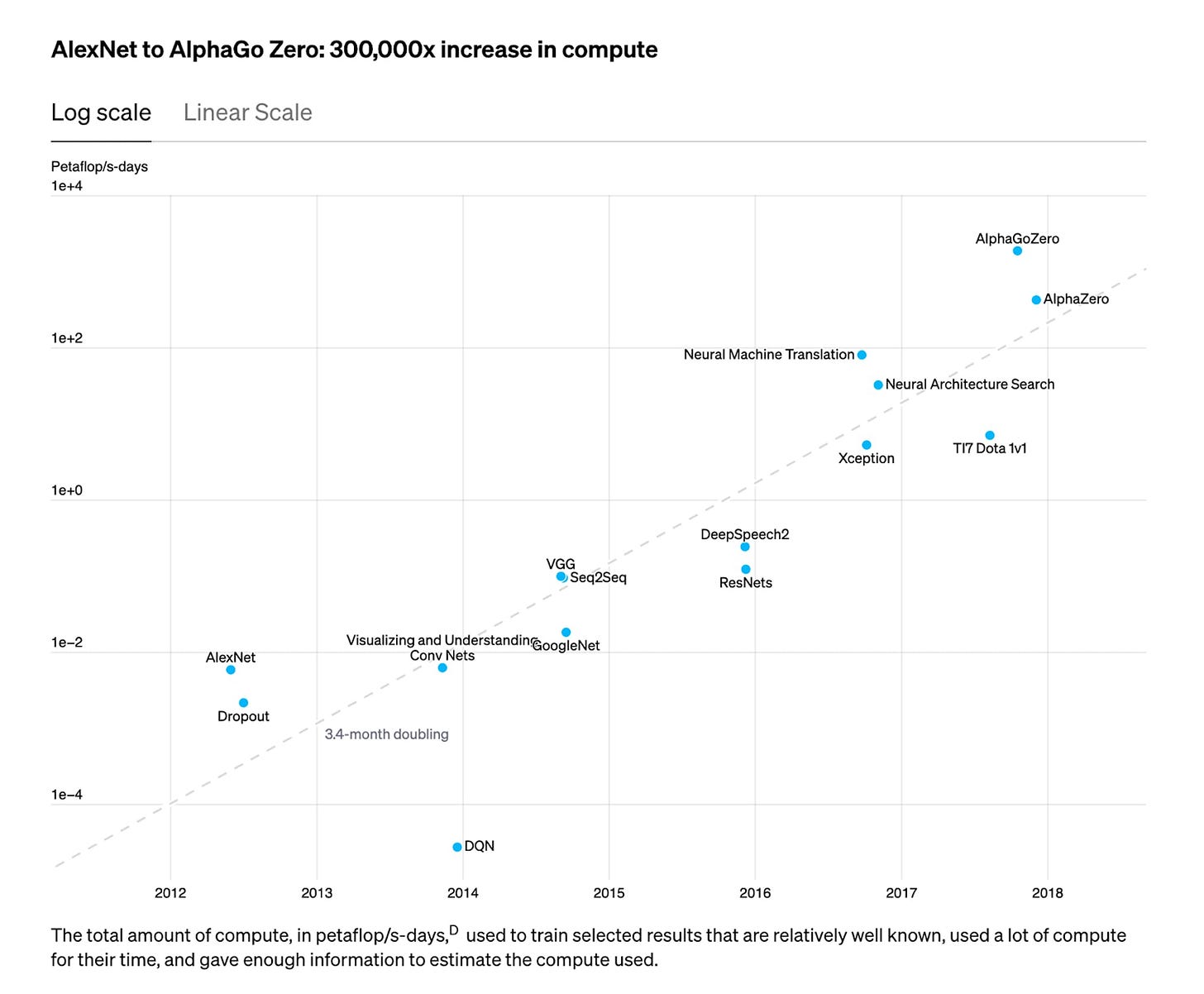

In May 2018, OpenAI published research into the history of the compute required to train large models, showing that compute had increased 300,000x, doubling every 3.4 months, since 2012.
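As a quick sanity check on those figures, the arithmetic can be sketched in a few lines of Python. The only inputs taken from the text are the 300,000x increase and the 3.4-month doubling time; the 24-month doubling period used for comparison is an assumption added here as a rough Moore's Law-style reference point, not a figure from the research itself.

```python
import math

# Figures quoted in the text above.
growth_factor = 300_000        # total increase in training compute
doubling_time_months = 3.4     # reported doubling period

# Number of doublings needed to reach the growth factor.
doublings = math.log2(growth_factor)

# Time span implied by that many doublings at the reported pace.
implied_months = doublings * doubling_time_months
implied_years = implied_months / 12

# Reference point (an assumption for illustration only): growth over the
# same span if compute had doubled every 24 months instead.
slow_doubling_factor = 2 ** (implied_months / 24)

print(f"doublings: {doublings:.1f}")
print(f"implied span: {implied_months:.0f} months (~{implied_years:.1f} years)")
print(f"24-month doubling over the same span: ~{slow_doubling_factor:.1f}x")
```

The implied span works out to a little over five years, which is consistent with a window running from 2012 to shortly before the research was published.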

Then, in June 2018, OpenAI published “Improving Language Understanding by Generative Pre-Training,” the first introduction of the GPT model, a transformer-based neural network architecture designed for language generation tasks. The model built on the transformer architecture introduced in “Attention Is All You Need,” a foundational paper published in June 2017.

The types of models OpenAI wanted to create, like GPT and later DALL-E, were going to require significant amounts of compute. The leaders of OpenAI, like Greg Brockman, argued that in order to “stay relevant” they were going to need more resources, and a lot more of them. As a first step, Sam Altman turned to Reid Hoffman and Vinod Khosla. Between that initial shift and April 2023, OpenAI would go on to raise over $11 billion in funding.

OpenAI’s new charter, published in April 2018, demonstrated the subtle changes to the business. The company still emphasized its openness and “universal mission”:

“We commit to use any influence we obtain over AGI’s deployment to ensure it is used for the benefit of all, and to avoid enabling uses of AI or AGI that harm humanity or unduly concentrate power.”

However, the charter also emphasized the company’s need to “marshal substantial resources to fulfill our mission.” Later, in March 2019, OpenAI explained how it intended to balance its fundamental mission while taking on investors with financial incentives by announcing “OpenAI LP.” The corporate structure would enable OpenAI to take on investor capital, but cap the returns its investors could potentially make, funneling any additional return to OpenAI’s non-profit arm.
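To make the capped-return idea concrete, here is a minimal sketch of how a return might be split under such a structure. It is a simplification under stated assumptions: the function name, the dollar amounts, and the single flat cap are hypothetical, and the actual terms of OpenAI LP are not described in this piece. The 100x multiple in the example is the cap figure cited later in this piece.

```python
def split_capped_return(invested: float, gross_return: float,
                        cap_multiple: float) -> tuple[float, float]:
    """Split a gross return between an investor and the non-profit.

    Hypothetical illustration: the investor keeps returns up to
    invested * cap_multiple, and anything above that cap flows to
    the non-profit arm.
    """
    if invested < 0 or gross_return < 0 or cap_multiple < 0:
        raise ValueError("inputs must be non-negative")
    cap = invested * cap_multiple
    to_investor = min(gross_return, cap)
    to_nonprofit = gross_return - to_investor
    return to_investor, to_nonprofit


# Hypothetical numbers, in millions of dollars: a $10M investment
# with a 100x cap and a $1,500M gross return.
investor_share, nonprofit_share = split_capped_return(10.0, 1500.0, 100.0)
print(f"investor: ${investor_share:,.0f}M, non-profit: ${nonprofit_share:,.0f}M")
```

In this sketch the non-profit only receives anything once the return exceeds the cap, so the higher the cap multiple, the less the cap constrains investors in practice.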

Crucially, OpenAI’s new charter scaled back on the promise of openness, pointing to safety and security as the justification for this:

“We are committed to providing public goods that help society navigate the path to AGI. Today this includes publishing most of our AI research, but we expect that safety and security concerns will reduce our traditional publishing in the future, while increasing the importance of sharing safety, policy, and standards research.”

It’s important to acknowledge that, with investors who have a financial incentive tied to the size of the outcome for OpenAI, competition can become a factor. When a company is required to generate a financial return, beating the competition becomes much more important. That increased emphasis on competition often takes precedence over openness.

Building A War Chest For Scale

After the announcement of OpenAI LP, the company didn’t waste any time garnering the resources its leadership team believed it desperately needed. In July 2019, Microsoft invested $1 billion in OpenAI, half of which was later revealed to have taken the form of Azure credits that would allow OpenAI to use Microsoft’s cloud products basically for free. OpenAI’s cloud spending scaled rapidly after this deal. In 2017, OpenAI spent $7.9 million on cloud computing. This climbed to $120 million in 2019 and 2020 combined. OpenAI had previously been one of Google Cloud’s largest customers, but after Microsoft’s investment, OpenAI started working exclusively with Azure.

In 2020, Microsoft announced it had “developed an Azure-hosted supercomputer built expressly for testing OpenAI's large-scale artificial intelligence models.” That supercomputer is powered by 285K CPU cores and 10K GPUs, making it one of the fastest systems in the world. Armed with Microsoft’s computational resources, OpenAI dramatically increased the pace at which it shipped new products.

In line with OpenAI’s focus on compute resources, the company increasingly pursued an approach where scale mattered. A 2020 profile in the MIT Technology Review described OpenAI’s focus on scale as follows:

“There are two prevailing technical theories about what it will take to reach AGI. In one, all the necessary techniques already exist; it’s just a matter of figuring out how to scale and assemble them. In the other, there needs to be an entirely new paradigm; deep learning, the current dominant technique in AI, won’t be enough… OpenAI has consistently sat almost exclusively on the scale-and-assemble end of the spectrum. Most of its breakthroughs have been the product of sinking dramatically greater computational resources into technical innovations developed in other labs.”

That increased access to computational resources allowed OpenAI to continuously improve the capability of its models, eventually leading to the announcement of GPT-2 in February 2019. However, while OpenAI continued to pursue scale, the company also seemed to increasingly prefer secrecy. As part of the rollout of GPT-2, OpenAI argued that the full model was too dangerous to release all at once, and instead conducted a staged release before releasing the full model in November 2019. Britt Paris, an assistant professor at Rutgers University focused on AI disinformation, argued that “it seemed like OpenAI was trying to capitalize off of panic around AI.”

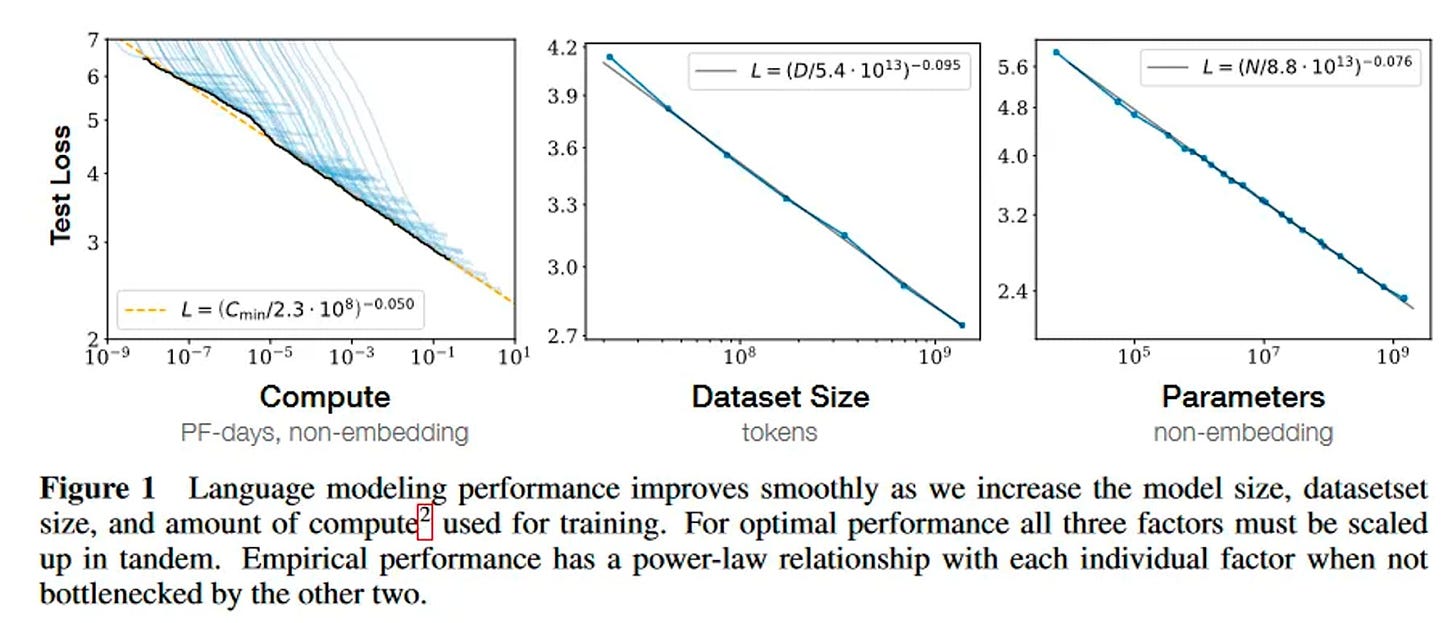

In January 2020, OpenAI researcher and Johns Hopkins professor Jared Kaplan, alongside others, published “Scaling Laws for Neural Language Models,” which stated:

“Language modeling performance improves smoothly and predictably as we appropriately scale up model size, data, and compute. We expect that larger language models will perform better and be more sample efficient than current models.”

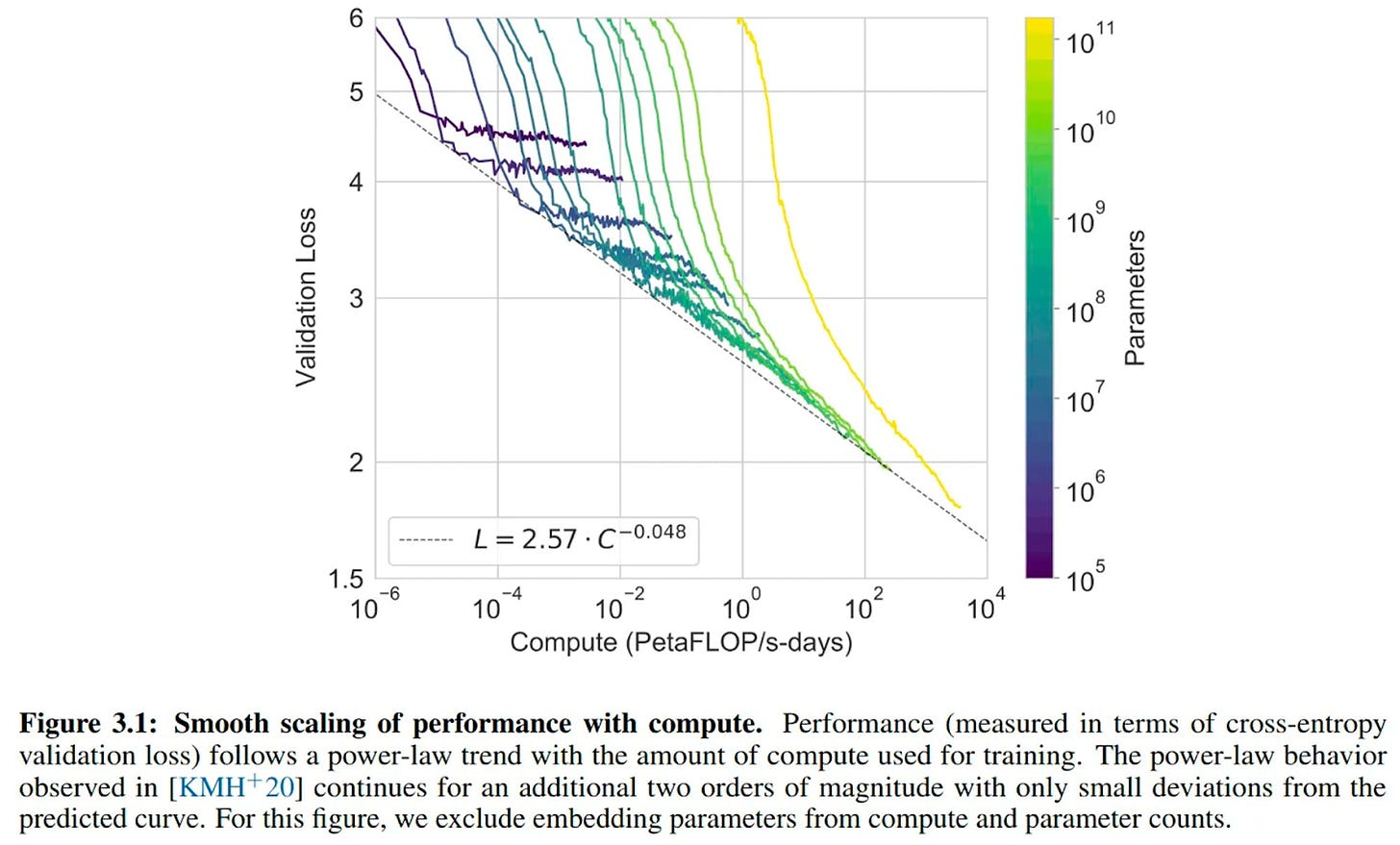

Again, the importance of compute and scale moved front and center in the progression of OpenAI’s models. The focus on scale was reinforced in May 2020 when OpenAI published a paper on GPT-3, “Language Models are Few-Shot Learners,” that demonstrated smooth scaling of performance with increased compute.

Furthermore, OpenAI found that increasing scale also improves generalizability, arguing “scaling up large-language models greatly improves task-agnostic, few-shot performance, sometimes even reaching competitiveness with prior state-of-the-art fine-tuning approaches.” Gwern Branwen, a freelance researcher, coined The Scaling Hypothesis in a blog post, and stated:

“GPT-3, announced by OpenAI in May 2020, is the largest neural network ever trained, by over an order of magnitude… To the surprise of most (including myself), this vast increase in size did not run into diminishing or negative returns, as many expected, but the benefits of scale continued to happen as forecasted by OpenAI.”

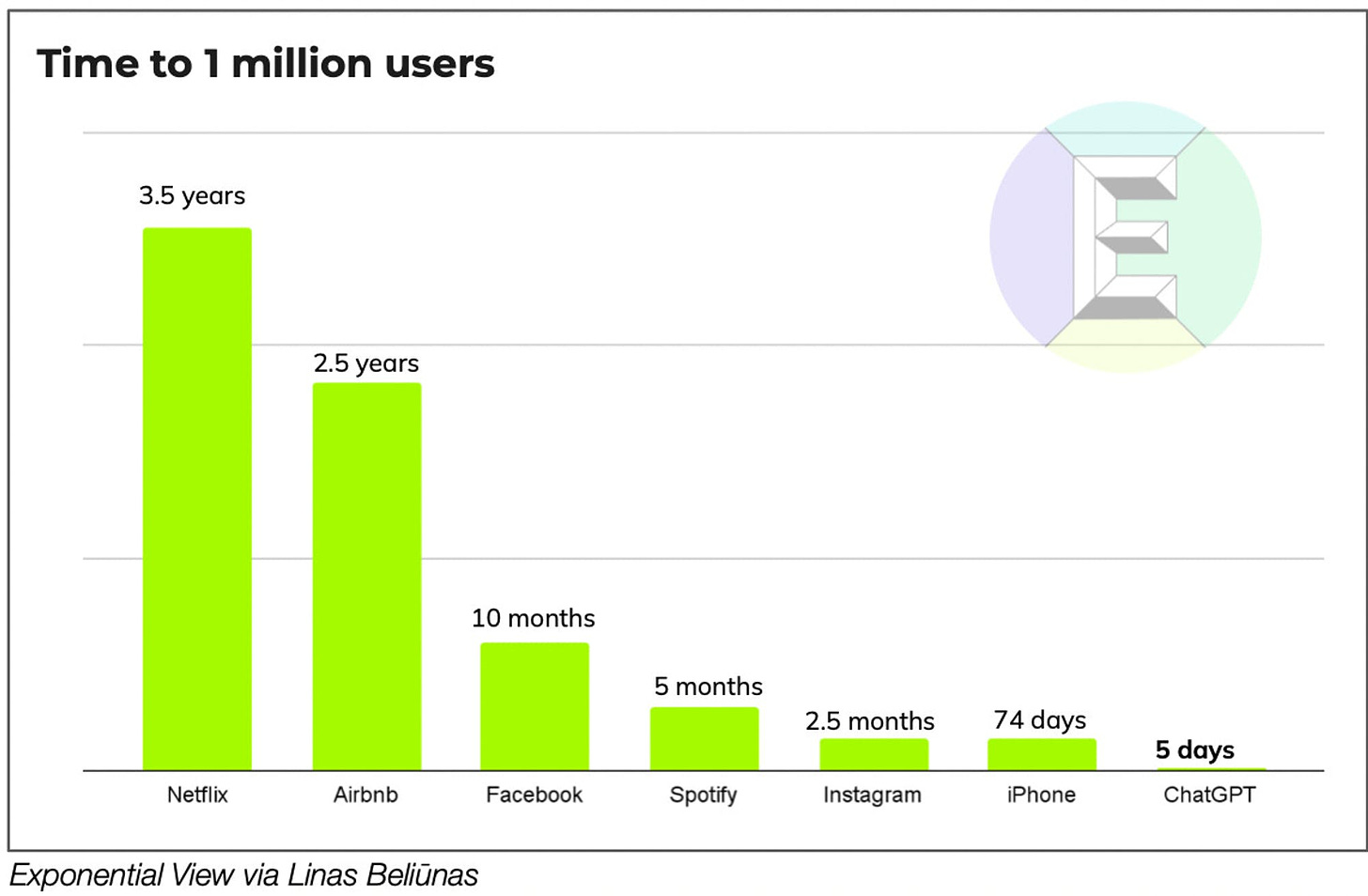

The success of OpenAI’s research did not stay confined to academics or AI-interested circles. It burst onto the public scene with the launch of ChatGPT on November 30th, 2022, which reached 1 million users after five days. Two months after the launch of ChatGPT, in January 2023, it had become the fastest-growing consumer product ever with 100 million MAUs. That same month, Microsoft doubled down on OpenAI with a new $10 billion investment. Many also began to believe that ChatGPT represented the first legitimate potential threat to Google’s dominance in search in decades.

Internally, OpenAI projected $200 million of revenue in 2023 and $1 billion by 2024. But despite the positive external reception and expected financial success, OpenAI’s products came with a cost. One report estimated that OpenAI’s losses in 2022 doubled to $540 million, with Sam Altman expecting to raise as much as $100 billion in the subsequent years to fund the company’s continued efforts to reach scale. This would make it “the most capital-intensive startup in Silicon Valley history.” ChatGPT alone had costs that Sam Altman himself described as “eye watering,” with one estimate putting the cost of keeping the service running at $700K per day.

While the success of ChatGPT and the seemingly endless resources from Microsoft made OpenAI a leading player in the world of AI, the approach the company had taken didn’t sit right with everyone. Several people involved in the early vision of the company saw OpenAI’s evolutionary process as moving it further and further from the openness implied in the company’s name.

From OpenAI To ClosedAI

OpenAI’s shift from non-profit to for-profit took place over the course of 2017 and early 2018, before being announced in April 2018. The cracks between the original ethos and the current philosophy started showing even before this announcement. In June 2017, Elon Musk, one of the original founders of OpenAI, had already poached deep learning expert Andrej Karpathy from OpenAI to lead Tesla’s autonomous driving division, an early sign of internal conflict at the company.

In February 2018, OpenAI announced that Elon Musk would be stepping down from the company’s board. Musk had promised the company $1 billion in funding but had contributed only $100 million before leaving, and he never contributed the rest. While both Musk and OpenAI claimed the departure was due to an increasing conflict of interest, that story has become more nuanced. In March 2023, it was revealed that Musk “believed the venture had fallen fatally behind Google… Musk proposed a possible solution: He would take control of OpenAI and run it himself.” The company refused, and Musk parted ways.

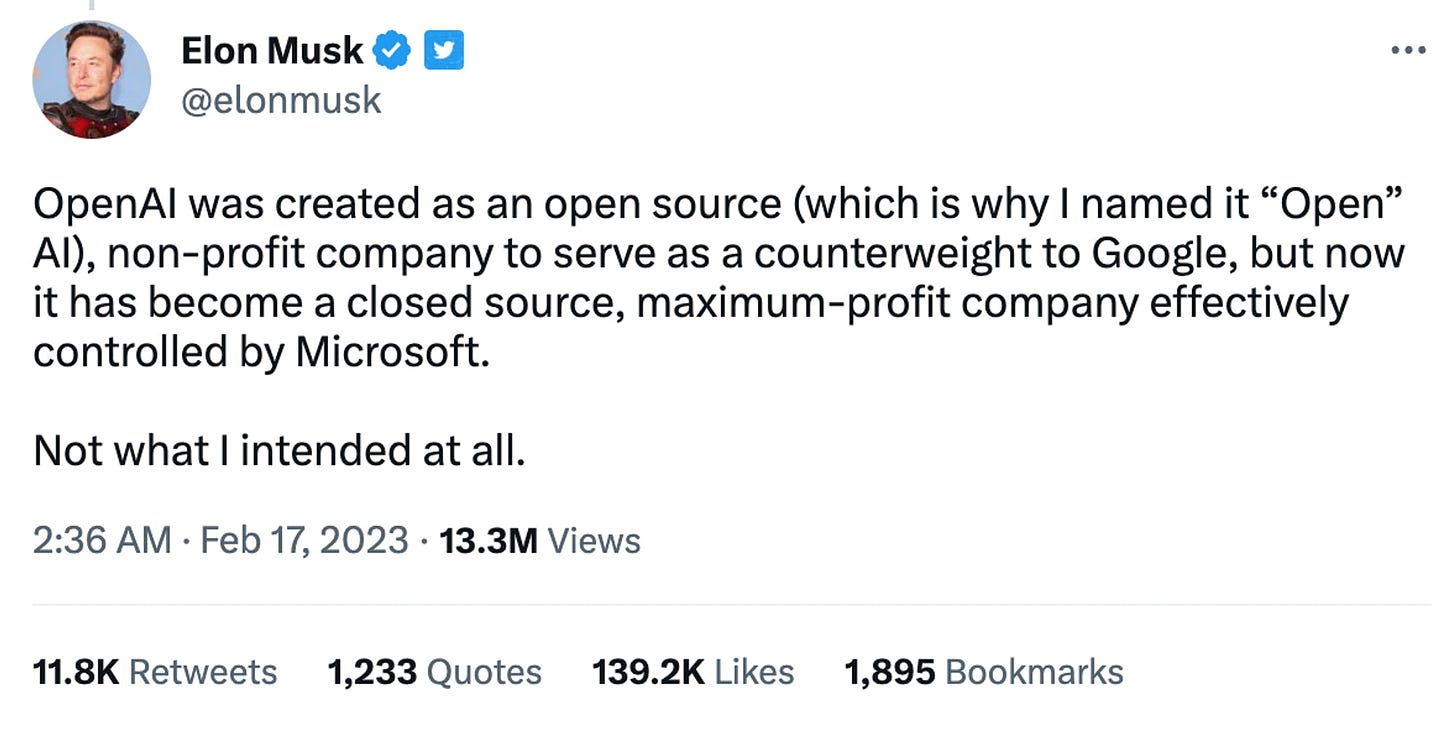

Later, after the success of ChatGPT and Microsoft’s $10 billion investment into OpenAI, Elon Musk became more vocal about his criticism and existential disagreements with the company.

Elon Musk wasn’t alone in those criticisms. When OpenAI published its new charter and announced OpenAI LP, the company touted its focus on avoiding work that would “unduly concentrate power.” After the OpenAI LP announcement in March 2019, critics took issue with that position. In particular, the idea that capping investor returns at 100x was in any way a deterrent to typical financially driven incentives drew criticism. In one Hacker News post, a commenter made this point:

“Early investors in Google have received a roughly 20x return on their capital. Google is currently valued at $750 billion. Your bet is that you'll have a corporate structure which returns orders of magnitude more than Google on a percent-wise basis (and therefore has at least an order of magnitude higher valuation), but you don't want to "unduly concentrate power"? How will this work? What exactly is power, if not the concentration of resources?”

In tandem with the success of ChatGPT, OpenAI became increasingly closed off from its initial vision of sharing its research. In March 2023, OpenAI announced GPT-4, the company’s first effectively closed model. In announcing GPT-4, the company argued that concerns over competition and safety prevented the model from a more open release:

“Given both the competitive landscape and the safety implications of large-scale models like GPT-4, this report contains no further details about the architecture (including model size), hardware, training compute, dataset construction, training method, or similar.”

In an interview around the same time, Ilya Sutskever, OpenAI’s chief scientist and co-founder, explained why OpenAI had changed its approach to sharing research:

“We were wrong. Flat out, we were wrong. If you believe, as we do, that at some point, AI — AGI — is going to be extremely, unbelievably potent, then it just does not make sense to open source. It is a bad idea... I fully expect that in a few years it’s going to be completely obvious to everyone that open-sourcing AI is just not wise.”

Members of the AI community have since criticized this decision. Ben Schmidt, the VP of Information Design at Nomic*, explained that “not being able to see what data GPT-4 was trained on made it hard to know where the system could be safely used and come up with fixes.” These comments came after a Twitter thread explaining the inherent limitations of such a closed approach.

One of the biggest limitations that users are left with when OpenAI closes off context for its models is a lack of understanding of the potential weightings or biases inherent in the model. For example, one review of ChatGPT indicated that it demonstrates political bias with a “pro-environmental, left-libertarian orientation.” Beyond specific instances of bias, Ben Schmidt further explained that “to make informed decisions about where a model should *not* be used, we need to know what kinds of biases are built in.” Sasha Luccioni, Research Scientist at Hugging Face, explained the limitations that OpenAI’s approach creates for scientists:

“After reading the almost 100-page report and system card about GPT-4, I have more questions than answers. And as a scientist, it's hard for me to rely upon results that I can't verify or replicate.”

Beyond case-specific concerns about replicability or bias, other critics focused more on the implications for AI research as a whole. A discipline that has borrowed heavily from academia was now facing a much more top-down, corporate level of control in the form of OpenAI’s alignment with Microsoft. William Falcon, CEO of Lightning AI and creator of PyTorch Lightning, explained this critique in a March 2023 interview:

“It’s going to set a bad precedent… I’m an AI researcher. So our values are rooted in open source and academia. I came from Yann LeCun’s lab at Facebook… Now, because [OpenAI has] this pressure to monetize, I think literally today is the day where they became really closed-source.”

As Sam Altman’s priorities for OpenAI continued to evolve starting in 2020 up to the launch of GPT-4, the changes didn’t just cause public debates on profit vs. non-profit or closed vs. open, nor did they just spark a conflict between Altman and Musk.

Instead, OpenAI’s approach “forces it to make decisions that seem to land farther and farther away from its original intention. It leans into hype in its rush to attract funding and talent, guards its research in the hopes of keeping the upper hand, and chases a computationally heavy strategy—not because it’s seen as the only way to AGI, but because it seems like the fastest.” That approach has caused real breaking points for several people working at OpenAI.

Most notably, Dario Amodei, former VP of Research at OpenAI, left to found rival AI startup Anthropic. In doing so he took 14 researchers with him, including OpenAI’s former policy lead Jack Clark. While Amodei left in December 2020, it’s been reported that “the schism followed differences over the group’s direction after it took a landmark [$1 billion] investment from Microsoft in 2019.” OpenAI would produce several alumni who would go on to start other AI companies, like Adept or Perplexity AI. But increasingly, OpenAI’s positioning would put a number of different AI experts both inside and outside the company on different ends of the spectrum between open and closed.

Be sure to check out the full deep dive here where we unpack the AI family tree, the issues being tackled in AI, and the role that open source is playing in that evolution.

Thanks for reading!