This is a weekly newsletter about the art and science of building and investing in tech companies. To receive Investing 101 in your inbox each week, subscribe here:

We all crave individuality. We want to feel like we are our own person. A person with unique perspectives and charm. And I don't mean the grander argument about free will, I'm just talking about how most people want to see their own decision making capabilities. We all want to be non-conformists, just like everybody else.

I was reminded of the scene from Life of Brian where he's speaking to a crowd, encouraging them to think for themselves: "You're all individuals!" The crowd repeats back, "We're all individuals!" Except one guy in the front, who sheepishly responds, "I'm not," before getting shushed.

People are like that. Seeking non-conformity through conformity. The more you pay attention to the way people make decisions, the more you see thousands of years of social creature / herd behavior rear its head. It may start to feel like the path to success is more likely to come from Forrest Gump's military strategy:

But after a while, the conformity starts to feel insane. You start to feel red pilled, and notice cracks in the reasons why people do things. The psychology behind everybody's beliefs can sometimes be much louder than the beliefs people are screaming on Twitter. Like Truman on The Truman Show starting to see the cracks in his reality.

You can start to feel trapped. You know you're being lied to, or things are being exaggerated. But in reality, starting to recognize the incentives that drive most people's behavior can be the most freeing thing available to you. In fact, staying under the illusion that people are free to have whatever belief they want to believe in, independent of incentives; that's the strongest prison of all.

"None are more hopelessly enslaved than those who falsely believe they are free." (Johann Wolfgang von Goethe)

Unfortunately, a LOT of people's philosophical stances are more reflective of the famous Upton Sinclair quote:

"It is difficult to get a man to understand something, when his salary depends on his not understanding it."

The same is painfully true in venture capital. I've written before about the Bubble Brains in venture. But the thing I failed to emphasize was that sometimes we think the Bubble Brains are just the masses. The dummies. The most programmable. But in reality, people in positions of power and influence are just as likely to be Bubble Brains because their financial incentives are the driving force for them.

Investing: A Leading? Or Lagging Indicator of Beliefs?

In a perfect world, investing should be an act of taking your world view and deploying capital to try and shape reality to that world view. If you believe a future is optimal, you should try to help build it. Investments become a lagging indicator of your beliefs. Often, however, the opposite happens. Whatever investments you end up making can play a big role in shaping your world view. Investments become a leading indicator of your beliefs.

One example from my own career. I had an acquaintance who was a fairly tenured investor at a different big established firm, and we would interact frequently. While I was at Coatue, we invested in Snyk. If you're not familiar with the company, the only critical part to understand for this story is that Snyk is a developer security product.

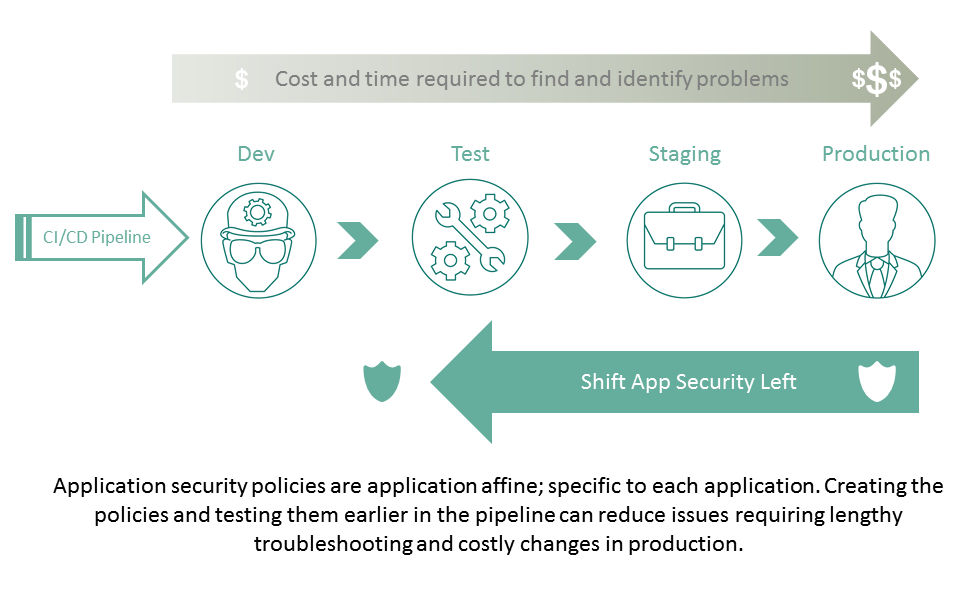

Rather than having developers write code, and then security folks look for vulnerabilities later, Snyk brings security into the code-writing process. This is called "shift left security," because if you laid out the development lifecycle, security is shifting left, or earlier, in that development lifecycle.

And I'll tell ya. The fights I got in with this guy about Snyk. "Developers don't care about security! They just want to write code."

I tried to lay out the conversations we'd had with developers that proved otherwise. "They're telling you what you want to hear."

I explained how 67% of developers have shipped code with known vulnerabilities. "They'll never catch everything! You have to have established security to catch problems anyways, why would you slow down development for security?"

That went on for years. From Snyk's $1B valuation in 2020 until they were valued at $7.5B in 2022. Then, around this time I heard from someone else at this guy's firm that he was on the war path internally. "How could we have missed Snyk? We need to do a post-mortem to understand this clear mishap."

Granted, most investors can't even admit that they're ever wrong. Some, like my acquaintance, were willing to at least say they got something wrong. But it happened only once it was effectively undeniable. Almost no one can admit they're wrong in the midst of the fight. As the battle rages on. Why? Incentives. They have a financial incentive invested in the world view that investment demands.

I will say I don't think every investor is just making decisions haphazardly and then slapping a world view on it after the fact. Most investors DO believe the world view reflected in their investments when they make the investment. But, unlike John Maynard Keynes, regardless of whether the facts change, their mind will remain unchanged as long as they have dollars at risk.

Nowhere is that more prevalent today than in AI. Not only are there haphazard world views slapped on after an investment is made, but there's also a fierce debate playing out more publicly online than is typical.

The Substack Heard 'Round The X

This week, the fight that put a lot of VCs' world views out in the open was a post from John Luttig, a partner at Founders Fund. In it, he took the stance that open-source AI will ultimately be inferior to closed-source.

And people had uh 👏 pin 👏 yuns 👏. First off, the core of John's argument is this:

"Despite recent progress and endless cheerleading, open-source AI will become (1) a financial drain for model builders, (2) an inferior option for developers and consumers, and (3) a risk to national security."

I'm more interested in unpacking the incentives at play behind both the world views of folks like John, and the people who responded to him. But I'll summarize some of his key points this way, because they are important parts of the conversation playing out as people's world views battle it out.

Cost

The key takeaway is that John believes open-source was, maybe once, long ago, a public good, but has mostly become a "freemium marketing strategy." Every company has eventually monetized through paid products:

"Red Hat hid CentOS behind a subscription service, ElasticSearch changed their licensing after accidentally seeding competition, and Databricks owns the IP that accelerates Apache Spark."

He makes the argument that it will be the same with AI. Companies will eventually have to cede their open-source rah-rah feel-good vibes, grow up, and adopt a functioning business model.

"Open-source models have the illusion of being free. But developers bear the inference costs, which are often more expensive than comparable LLM API calls: either pay a middleman to manage GPUs and host models, or pay the direct costs of GPU depreciation, electricity, and downtime."

Inferior Product

Next, he points out that, while open-source AI has been able to reach some performance parity with proprietary models, those comparisons are looking at "the war of yesterday, rather than of tomorrow."

"Frontier models were trained on the corpus of the internet, but that data source is a commodity – model differentiation over the next decade will come from proprietary data, both via model usage and private data sources."

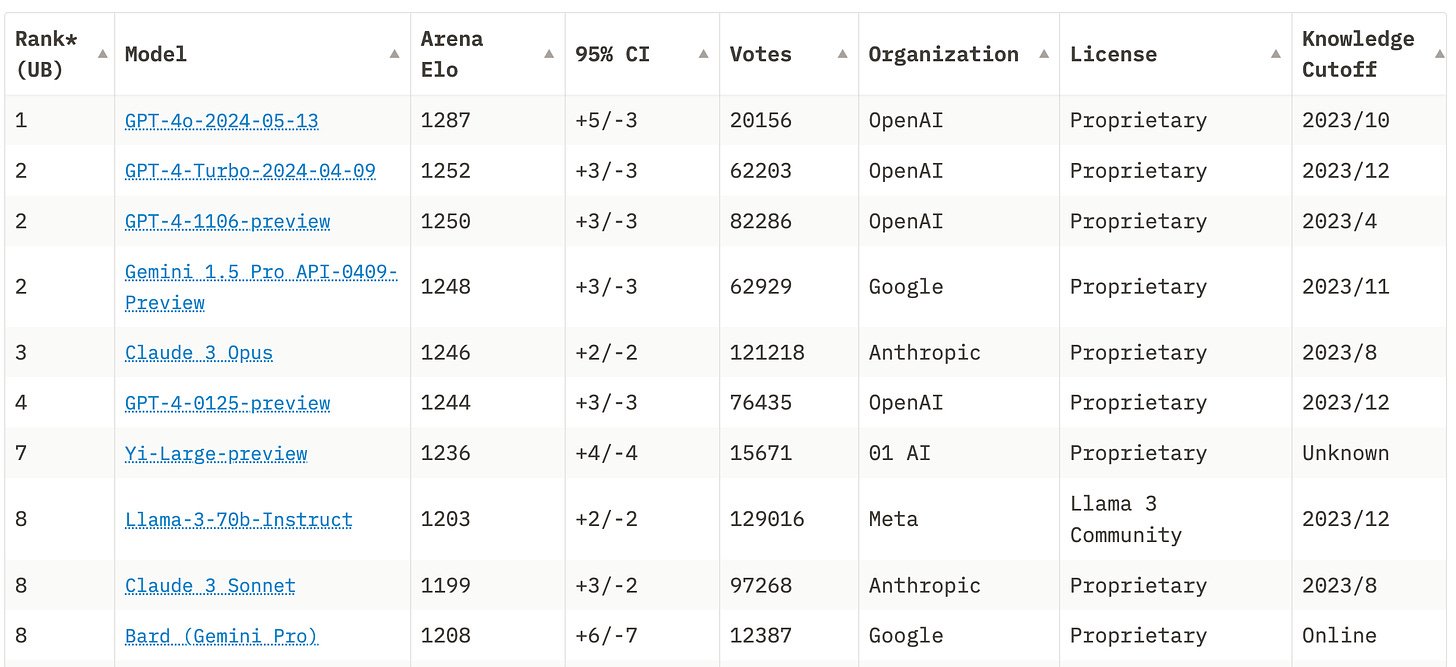

And it's a fair point. While open models from Meta, Mistral, and a few others have occasionally gotten into the top of the performance leaderboard, of the top 10 models right now, only one of them isn't proprietary.

Security Risk

Finally, he makes the point that open-source AI represents a national security risk:

"AI is a technology of hegemony. Even though open-source models are lagging behind the frontier, we shouldn’t export our technological secrets to the world for free. We’ve already recognized the national security risk in other parts of the supply chain via export bans in lithography and semiconductors. When open-source model builders release the model weights, they arm our military adversaries and economic competitors. If I were the CCP or a terrorist group, I’d be generously funding the open-source AI campaign."

And there's certainly credence to this. There have been countless instances of IP theft where China has sought to steal American secrets to expand their own technological capabilities. The Soviets built their first nuclear weapon because Klaus Fuchs stole American secrets. For most of recent technological history, America innovates and other people steal. Making any AI model readily available online puts it right into the hands of nations that are clearly not on our team.

Saying The Quiet Part Out Loud

Cunningham's Law states that the best way to get the right answer on the internet is to post the wrong answer. Similarly, the best way to get people to demonstrate their world view on the internet isn't to ask them "what do you think?" It's to post an article like John's and let them draw their own battle lines.

Right off the bat, John makes the point that he believes the people championing open-source AI are all following their own incentives:

"An unusual open-source alliance has formed among developers who want handouts, academics who embrace publishing culture, libertarians who fear centralized speech control and regulatory capture, Elon who doesn’t want his nemesis to win AI, and Zuck who doesn’t want to be beholden to yet another tech platform."

But what's important to note is that the pro-proprietary crowd have their incentives displayed just as clearly. At the end of the post, John reminds people that his firm, Founders Fund, is an investor in OpenAI.

It's important to note that OpenAI has spent heavily on AI lobbying, hired a former US senator, and Sam Altman seems to be on a one-man Terror Tour to scare the crap out of anyone who will listen, calling for the equivalent of "international weapons inspectors" for AI.

It seems to have paid off, because Sam Altman was included on a federal AI advisory panel that, while it includes likeminded folks such as Satya Nadella @ Microsoft, Dario Amodei @ Anthropic, and Sundar Pichai @ Google, actively excludes the likes of Zuckerberg or Yann LeCun @ Meta.

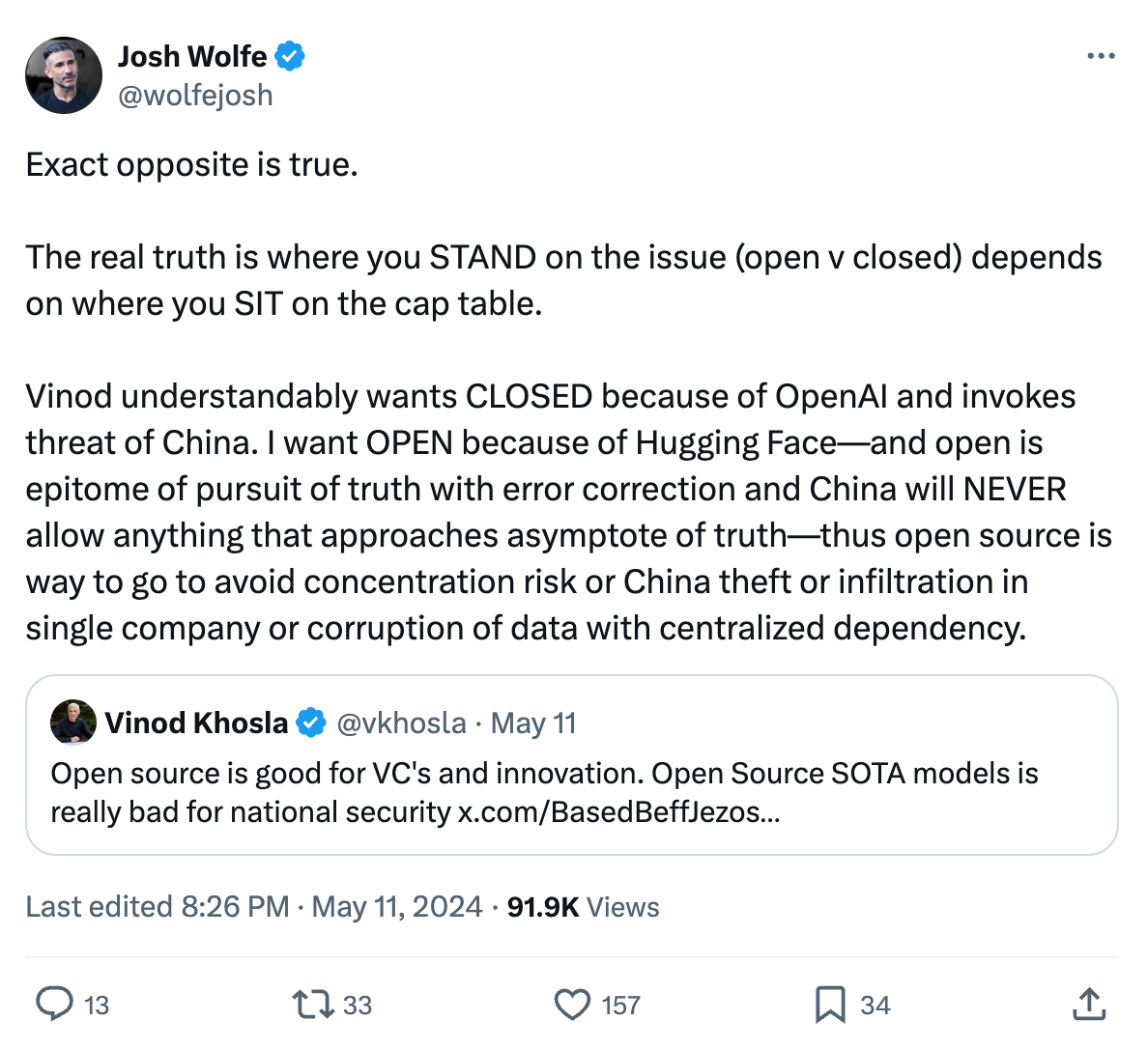

Another big proponent of the pro-proprietary crowd? Vinod Khosla. He's been incredibly vocal about the national security risk of open-source AI.

Once again, it's important to note that Vinod has been investing in OpenAI since it was a non-profit. He has a multi-billion dollar position that is dependent on OpenAI being successful. Ironically, he says "open source is good for VC's and innovation. Open Source SOTA models is really bad for national security." What he fails to add is that while "open-source is good for VCs, closed-source is good for ME as a VC."

Josh Wolfe, on the other hand, comes right out and says the quiet part out loud:

"Where you stand on the issue depends on where you sit on the cap table." And that's effectively what played out in the response to John's initial post.

Mommy & Daddy's AI Fight

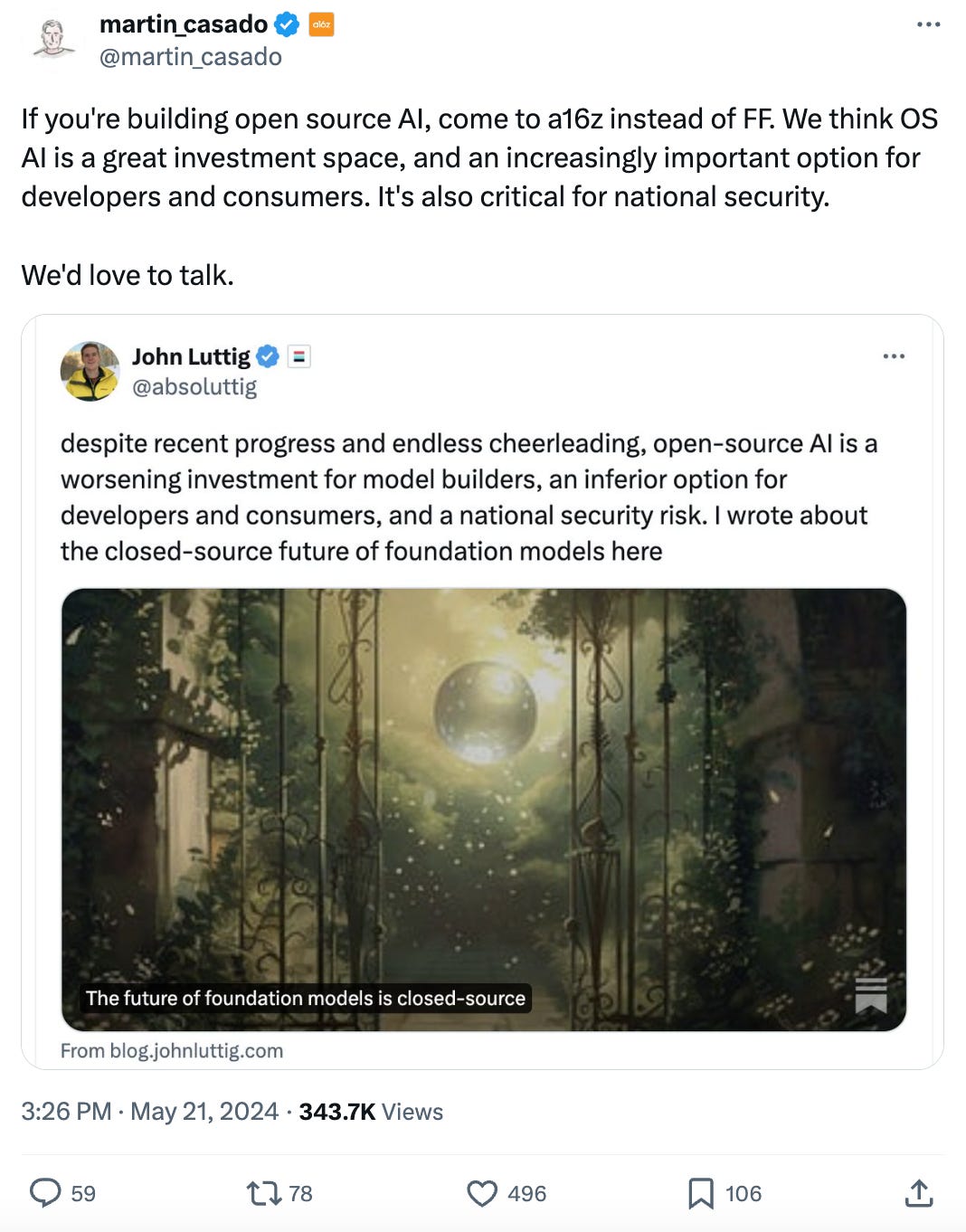

In general, I feel like it's rare that VCs come right out and take shots at each other for their companies' positions. Granted, I just laid out the Khosla vs. Lux fight playing out on Twitter, so it's getting less rare. With AI, it's boiling over more and more. For example, this was the response from Martin Casado at a16z:

Martin has certainly been a vocal proponent of open-source AI and anti-AI doomerism and regulation.

Now, here's an important nuance. a16z is actually also an investor in OpenAI, though I can't tell how much. Certainly not a core position, like OpenAI is for Khosla. However, a16z IS a meaningful investor in Databricks, Mistral, Eleven Labs, Replicate, and Character AI. All of whom benefit from AI being more open-source.

The argument went back and forth a few days ago. John pointed out that a16z simultaneously believes the US has to stop China from dominating in AI, but that open-source models should proliferate freely (including across Chinese borders).

Martin's response? Yes and Yes. He claimed those positions aren't inconsistent.

From e/accs like Beff Jezos to YC boss Garry Tan to Epic Games CEO Tim Sweeney, and from 8VC founding GP Alex Kolicich to Sarah Guo at Conviction, everyone had a take.

Now, some people might like the open-spirited debate. It's a battle of ideas, and let the best ones win. But the main problem is that it rarely works out that way. When you look at OpenAI with more than $11 billion in a war chest, or when you look at Amazon / Microsoft / Google wading heavily into the war, clearly intent on securing AI as an extension of the Cloud Wars, you realize that there is a lot of money that could drastically tip the scales of an "open and honest debate of ideas."

Fuel on The Fire

My sense is the scale tipping is going to get worse before it gets better (if it ever does). Around the same time as the John vs. Martin debate was boiling over, it was layered on top of a similar, but different, conversation about SB 1047. This is a bill that recently passed the California State Senate, which "aims to impose rigorous safety and regulatory measures on the development of large-scale artificial intelligence (AI) systems."

The effort feels draconian, unnecessary, and as if it was written by OpenAI to pull the ladder up behind them. If you thought VCs who were worried about AI doomerism could be unreasonable, wait until you see AI doomer regulators.

My concern is less with the merits of the argument, for or against. I have my own perspectives, though I'm certainly no expert. And I enjoy watching people smarter (and dumber) than me play out their arguments in public, so we can all think about the problem from different angles.

My concern with this particular fire, instead, is the complex interweaving between world views, stated beliefs, and financial incentives. When people have large positions riding on closed AI winning, you should be cautious before buying into their stated world view. Especially if you DON’T have the same incentives.

Beliefs as Leading or Lagging Indicators

I've written before about this idea of beliefs. This is a quote I've re-referenced over and over and over and over again now. In fact, it's probably become the theme of my thinking over the last 12 months or so:

"So often, people trust nuanced tribal group identity and political association without any basis of first principles. I'm not Mormon, or Christian, or Republican, or a Costco member. I am a system of values and beliefs that determine how I act. Group membership should be a lagging indicator of your beliefs, not a leading indicator. When people substitute their own value system with a cookie-cutter platform from their in-group the first thing to die is nuance."

Mike Solana put it well in response to the kerfuffle. Granted, I think he was taking a strong counter-positioned stance against the open-source AI folks given their response. But his last line rang true for me across both ends of the spectrum on this (and most other) arguments.

"Almost nobody knows what they think, or why." If there was one crux of what I'm trying to say today, it's this. This is the problem. There are merits to both open and closed AI, and we should have that debate out. We should build and fund companies that act as proxies in that battle, demonstrating the quality of their work and arguments.

But the biggest problem is that people aren't just studying this stuff out in their minds, forming a world view, defining their beliefs, and then engaging in earnest debate for the highest quality ideas. The debate is riddled with financial and professional incentives left and right. And what's worse, a lot of the discourse plays out on Twitter where people may not even connect the dots of the incentives behind the argument.

Very few people understand how Cathie Wood or Chamath Palihapitiya made money off of people believing their bonkers claims on Twitter. And that was bad. What people thought were good-faith discussions online led to dramatic amounts of wealth getting wiped out. And that's bad! That shouldn't just be a cost of doing business. That shouldn't just be a cautionary tale for doing your own research.

There has to be an understanding that everyone's arguments come with an inherent bias. We need to do a better job of internalizing the externalities that go into the technology we buy and build. This is why one of my favorite people to watch is Chris Sacca. He's running a firm with a very clear bias towards a specific outcome: sustainability. But he does it in a way that clearly articulates his incentives. I wrote about this when I profiled them:

"At one point [Chris was asked] a pretty direct question. "Are you going to make more money from climate than you are from tech?" And without missing a beat Chris said, "Absolutely. I'll get way richer from this. I have multiple companies in the portfolio right now that have the trajectory to become multi-trillion dollar companies." Chris' answer is indicative of what I think the fundamental breakthrough is for Lowercarbon: Greed goes further than guilt."I want to sell to people who are acting out of sheer self interest. We have maybe 200 million people who will think about climate when they make a purchase decisions and I need to get 7 billion more. I'm not going to get all these people on the planet to pay a premium out of just consciousness. I just have to offer them a better product. Guilt and shame aren't going to cut it."

He’s not saying, “this is important for everyone, and all our returns are going to charity.” He’s shooting straight. I’m going to make a lot of money from this by working with greedy, self-interested people. But that’s a good thing! If we’re going to make a dent in climate change, we can’t do it just by making people feel bad.

We need more people willing to more openly say "yes, I'm going to make money off of this." Though, I'm getting pretty sick and tired of the concept of exit liquidity. If you have to add to that statement, "yes, I'm going to make money off of this, and the way I'm going to make money is off of you being stupider than me," then log off. I hate you.

Warren Buffett has clearly aligned incentives. If Berkshire Hathaway stock goes up, he's going to make more money. But is he going to make more money off of idiots like you? No. Because he doesn't sell. He's building for the long-term.

VCs are, inherently, incapable of that same long-term alignment. They have investments that have defined (and relatively short) hold periods and funds that have lifecycles. It's almost impossible for VCs to be financially incentivized by overall outcomes. Structurally, the business forces VCs to form their world view and beliefs around incremental outcomes. What needs to happen for my companies and my fund to do well? Anything outside of that shouldn't be counted on as a motivating factor for them.

And everyone should be aware of that when they watch debates like this play out.

